This post is the third in a series of posts about the “Fallacious Simplicity of Deep Learning”. I have seen too many comments from non-practitioner who thinks Machine Learning (ML) and Deep Learning (DL) are easy. That any computer programmer following a few hours of training should be able to tackle any problem because after all there are plenty of libraries nowadays… (or other such excuses). This series of posts is adapted from a presentation I will give at the Ericsson Business Area Digital Services technology day on December 5th. So, for my Ericsson fellows, if you happen to be in Kista that day, don’t hesitate to come see it!

In the last posts, we’ve seen that the first complexity lay around the size of the machine learning and deep learning community. There are not enough skilled and knowledgeable peoples in the field. The second complexity lay in the fact that the technology is relatively new, thus the frameworks are quickly evolving and requires software stacks that range from all the way down to the specialized hardware we use. We also said that to illustrate the complexities we would show an example of deep learning using keras. I have described the model I use in a previous post This is not me blogging!. The model can generate new blog post looking like mine from being trained on all my previous posts. So without any further ado, here is the short code example we will use.

In these few lines of code you can see the gist of how one would program a text generating neural network such as the one pictured besides the code. There is more code required to prepare the data and generate text from model predictions, than simply the call to model.predict. But the part of the code which related to create, train and make predictions with a deep neural network is all in those few lines.

You can easily see the definition of each layers: the embedding in green, the two Long Short Term Memory (LSTM) layers, a form of Recurrent Neural Network, here in blue. And a fully connected dense layer in orange. You can see that the inputs are passed when we train the model or fit it, in yellow as well as the expected output, our labels in orange. Here that label is given the beginning of a sentence, what would be the next character in the sequence. The subject of our prediction. Once you trained that network, you can ask for the next character, and the next, and the next… until you have a new blog post… more or less, as you have seen in a previous post.

For people with programming background, there is nothing complicated here. You have a library, Keras, you look at its API and you code accordingly, right? Well, how do you choose which layers to use and their order? The API will not tell you that… there is no cookbook. So, the selection of layers is part our next complexity. But before stating it as such let me introduce a piece of terminology: Hyper-parameters. Hyper-parameters are to deep learning and machine learning any parameter for which value you can vary, but ultimately have to finetune to your data if you want you model to behave properly.

So according to that definition of hyper-parameter, the deep neural network topology or architecture is an hyper-parameter. You must decide which layer to use and in what order. Hyper-parameter selection does not stops at the neural network topology though. Each layer has its own set of hyper parameters.

The first layer is an embedding layer. It converts in this case character input into a vector of real numbers, after all, computers can only work with numbers. How big this encoding vector will be? How long the sentences we train with will be? Those are all hyper-parameters.

On the LSTM layers, how wide or how many neurons will we use? Will we use all the outputs all the time or drop some of them (a technique called dropout which help regularizing neural network and reduce cases of overfitting)? Overfitting is when a neural network learns so well your training examples that it cannot generalize to new examples. Meaning that when you try to predict on a new value, the results are erratic. Not a situation you desire.

You have hyper-parameter to select and tweak up until the model compilation time and the model training (fit) time. How big the tweaking to your neural network weights will be at each computation pass? How big each pass will be in terms of examples you give to the neural network? How many passes will you perform?

If you take all of this into consideration, you end up with most of the code written being subject to hyper-parameters selection. And again, there is no cookbook or recipe yet to tell you how to set them. The API tells you how to enter those values in the framework, but cannot tell you what the effect will be. And the effect will be different for each problem.

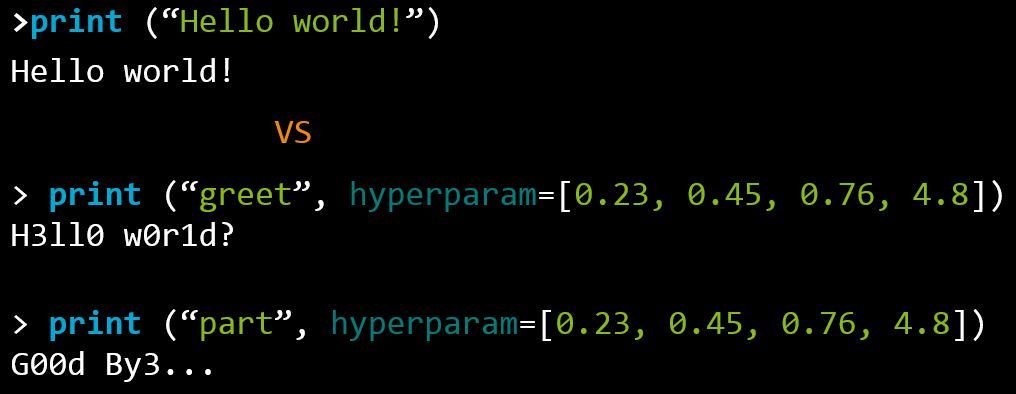

It’s a little bit like if what you would give as argument to a print statement, you know like print(“hello world!”) would not be “hello word”, but some values which would print something based on that value (the hyper-parameter) and whatever has been printed in the past and you would have to tweak it so that at training time you get the expected results!!! This would make any good programmer become insane. But currently there is no other way with deep neural networks.

So our fourth complexity is not only the selection of the neural network topology but as well as all the hyper-parameters that comes with it.

It sometimes requires insight on the mathematics behind the neural net, as well as imagination, lots of trials and error while being rigorous about what you try. This definitely does not follow the “normal” software development experience. As said in my previous post, you need a special crowd to perform machine learning or deep learning.

My next post will look at what is sometime the biggest difficulty for machine learning: obtaining the right data. Before anything can really be done, you need data. This is not always a trivial task…

Nice post. Another aspect of why DL is not so simple is that it will always return an answer. It’s not like a regular program that you can easily and quickly evaluate when it’s broken.

One must interpret/investigate the results and try to explain/find out why it’s working with that architecture. Yes, when it works we may not know why that happened 🙂

Or why is not working although I’m using a well-known architecture and the “same” kind of data?

Also, a good dose of patience and perseverance is mandatory 🙂 since training can take days or weeks until you find out that the decisions you’ve taken made no improvement or even made it worse compared to the previous settings.

Imagine the implications of all these uncertainties during a project. How to estimate the deliveries? Imagine the company culture to absorb that a project may be delayed in weeks/months because the team was working on a DL architecture that after weeks of training resulted in poor results.

It will be interesting to see how stakeholders will handle this reality.

Looking forward to the next post.

LikeLike

Cannot agree more! Training is long and you can’t have certitude as to when you will get a “delivery”. I guess this is one of the reasons why in my group we treat the data science as experiences. Sometime they work, sometime they don’t. If they work then we can look at the industrialization (that part we should be able to more properly estimate as we already have a working model, and know how to train/validate it, etc.). That’s probably the boundary between the Data Scientist or Machine Learning Scientist and the Machine Learning Engineer. The Scientist do the science which might or not work and will take an indefinite time to do. The engineer implements the discovered scientific facts as a product. Making it scale, monitoring it, etc… Still one thing different is that for “no” reason, that implemented system could stop to work properly any time in the future… then you might have to go back to the Scientist for a “new” solution…

LikeLiked by 1 person