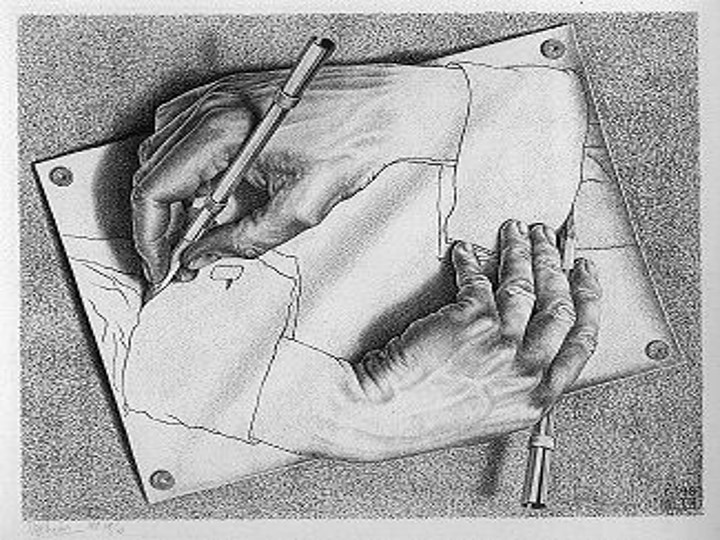

I was recently rethinking the flow of ideas and how ideation works. If you want to get up to speed, I suggest you watch Dr. William Duggan’s TED talk. In a nutshell, “your brain takes things from the outside, and makes connections”. When you have a creative thought, your brain takes things from the outside and makes a new combination. We do not create ideas out of the blue; the only part that comes out of the blue is the new combination. You need to have the parts in your mind before you can create that new combination. Which parts will you need? How deeply do you need to understand those parts? Maybe we can find some answers in the patent criterion requiring an “inventive step”. Basically, it requires that your invention not be obvious to someone skilled in the field of application. In other words, the “things” you put together and the way you put them together should not appear obvious to people working in your field. In many cases, this rules out many of the common “parts” of your field. You will have to dig for parts elsewhere.

There is no single source of “things/ideas” to feed your internal ideation process; you will draw inspiration from the many ideas and experiences you have been exposed to. It is the sum of your knowledge in many different fields that will allow you to create those new connections. Maybe the last missing part is something you will read about, hear about, or experience tomorrow, and you will immediately make an invaluable connection. Maybe you already have all the “things/ideas” parts inside of you but haven’t encountered the problem that needs solving yet, and once you see the problem, the solution will appear clearly to you. There is simply no way of knowing what will turn out to be important. The only certainty is that, since the combination should not be obvious to people working in your field, it is not something you will get out of a single study program. It will require a sum of experiences and diverse knowledge, and perhaps even diverse points of view.

Hear me out: I’m not saying that a new grad cannot be the inventor of a fantastic new combination. However, if they are, it will be because of other experiences and learning they had previously or in parallel. In the same way, a lifetime may pass before knowledge you acquired early in life suddenly creates a new, useful combination in your mind. As Victor Hugo said: “Nothing is more powerful than an idea whose time has come”. The time might not have been right before, but suddenly it is the right time for that combination of ideas to solve a problem.

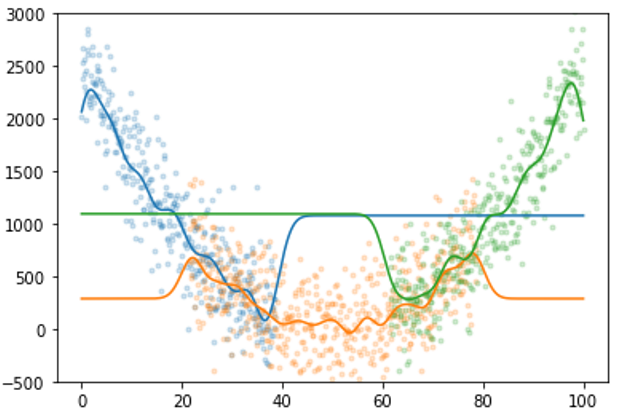

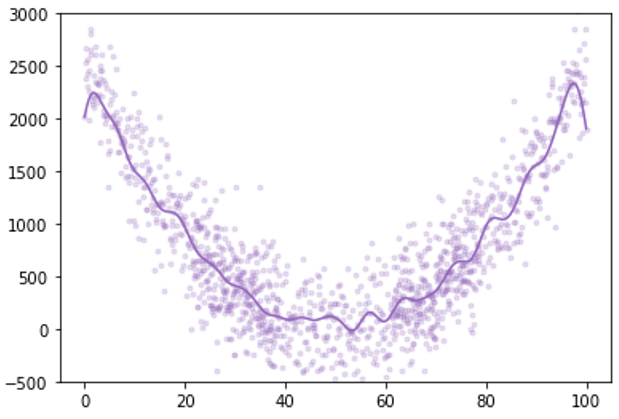

Early in my career I worked in the aerospace industry, more specifically in the simulation field, where I learned the basics of simulation. A few years back (you can find it in my past articles), I needed a simulation of “denizens” going about their lives in a city as a source of initial data for my work in the telecom industry. There, my knowledge of simulation came in handy, and my knowledge of open data sources helped me integrate various data inputs into that simulation. When we started comparing the simulation with some real-life examples, we saw ways of characterizing certain elements of the input distributions to better represent real life. In a way, this pointed to deep anonymization through simulation as a potential solution to the personal data protection acts around the world.

This didn’t lead to a patent in this case, but it solved some of the issues we had at the time. One thing I like to do, however, especially with those wacky ideas, is to share them. I post parts of them on my blog, I give internal presentations with more content, etc. That way, those ideas continue to live on in others’ minds. Recently I saw a presentation from colleagues who plan to create higher-detail simulations of people going about their lives and “virtually” interacting with real cloud-based telecom equipment to obtain a high-fidelity simulation of reality. Maybe nothing from my previous work has anything to do with that, or maybe presenting my ideas and writing a blog about them made someone think at some point, and now is the time for that idea to become powerful.

I’ve always been highly interested in the process of generating ideas, ideation if you will. At some point in my career I saw the need in my local company for a tool supporting ideation. I was greatly inspired by an article I read at the time about a company that had implemented a “stock market of ideas”. We created that tool, and what we discovered is that, beyond the tool, the process of handling those ideas is central to making something out of them. Eventually the tool spread to other countries and got some attention from headquarters. Ericsson created a company-wide equivalent of our tool, and we participated in its creation by sharing our experiences and our view of the important requirements for such a tool.

Those are only two anecdotal examples. I could give you many more, and I’m sure many of you have had such experiences. The central point is that new ideas come from connecting past ideas together. Those ideas are not all “your” ideas, but you were exposed to them because someone communicated them, or you experienced them. It is the sum of your experience that helps you come up with new, interesting combinations when the time is right, and it is communicating your ideas that makes it possible for others to build new, interesting combinations in the future.

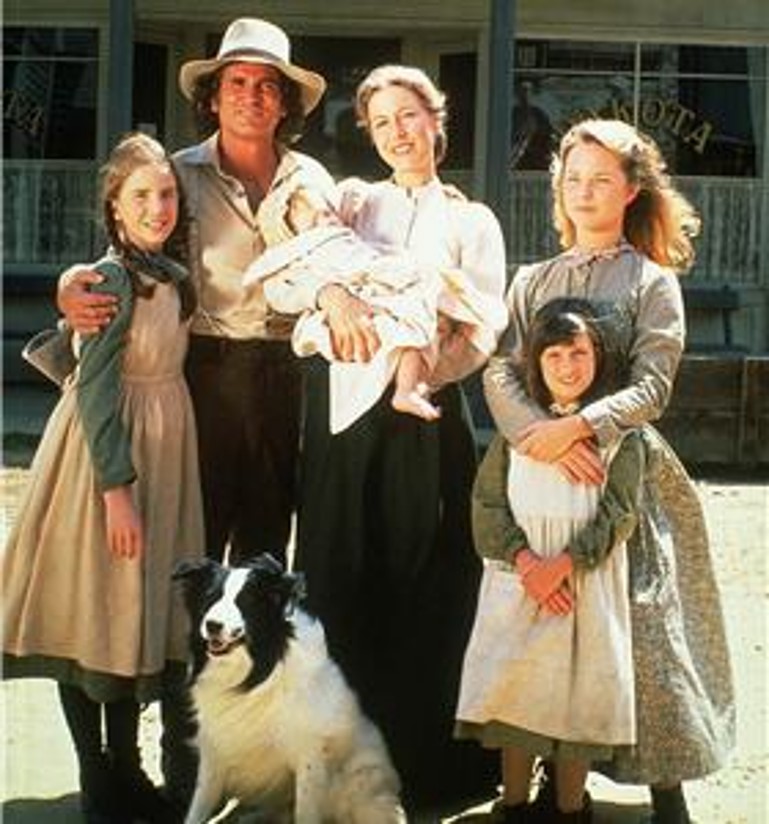

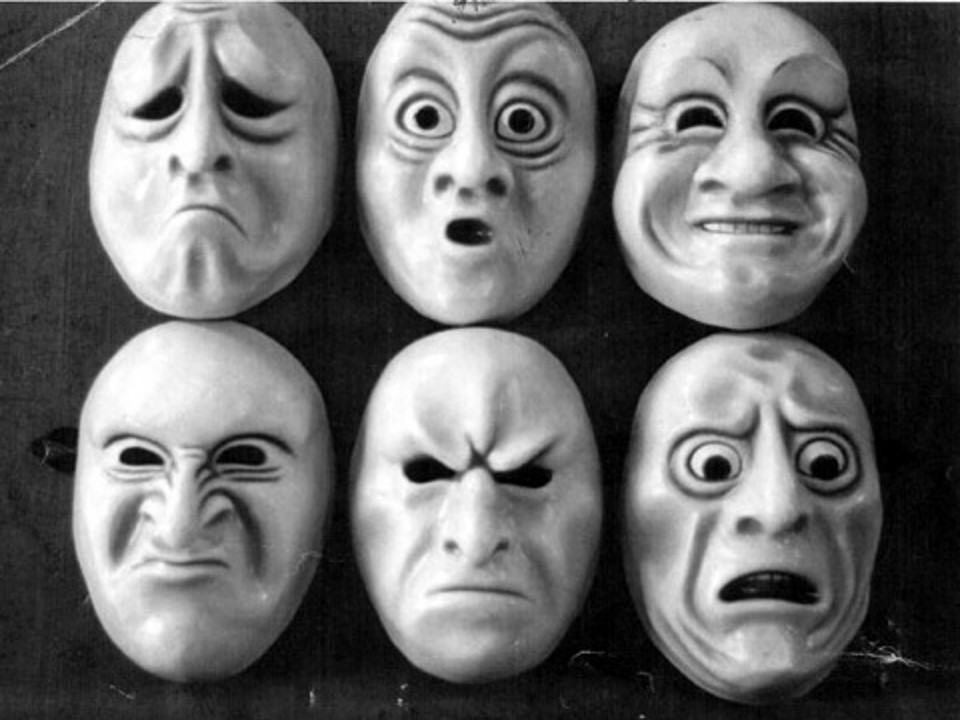

Hopefully you now understand the point of view expressed in the title of this article. Ageism, whether it means discarding the new ideas/parts of younger people or firing older employees (we often hear that you cannot stay in tech past 50), is a sure way of killing your sources of ideas/parts and your potential to assemble those ideas/parts into new, useful combinations when the time is right. New grads come with new ideas/parts from the most recent technology. Older employees have experienced a lot of technologies (most probably including many of the new ones), made mistakes, and learned from them. They can probably anticipate some of the problems that may arise from new combinations of ideas, and they have a large pool of experience from which to propose new combinations when the time is right.

The second point I hope I made clear is that sharing thought-leadership ideas is essential to expose others, and yourself, to ideas that may later become the source of the new combinations you need. By exposing your ideas, you create a vehicle for discussing them with a broad range of people and experiences. You enrich others as well as yourself, enabling future combinations to come to fruition. We do not live in closed bubbles. It is idea sharing that enables us to stand on the shoulders of the giants who came before us and, maybe, to become the giants the next generation of ideas will stand on.

Cover image by Anemone123 at Pixabay.